UK “White Paper” on AI Regulation

The white paper was presented to Parliament by the Secretary of State for Science, Innovation and Technology, Michelle Donelan MP, and is dated 3 August 2023.

Below are some of the key cybersecurity and risk takeaways:

AI enhances our response capabilities. Key examples include the Child Abuse Image Database, network security, pattern recognition and recursive learning, and proactive cyber defence against malicious actors.

AI also increases the severity of automated, targeted cyber attacks.

AI can have a significant impact on safety and security.

AI systems should be technically secure and function reliably (as intended and described).

System developers should be aware of the specific security threats that could apply at different stages of the AI life cycle and embed resilience into their systems.

Regulators may consider the National Cyber Security Centre principles for securing machine learning models when evaluating whether AI actors are sufficiently prioritising security measures.

Key risks AI poses include safety, security, discrimination, human rights, agency, and privacy. The paper places strong emphasis on regulatory oversight to mitigate these risks, among others.

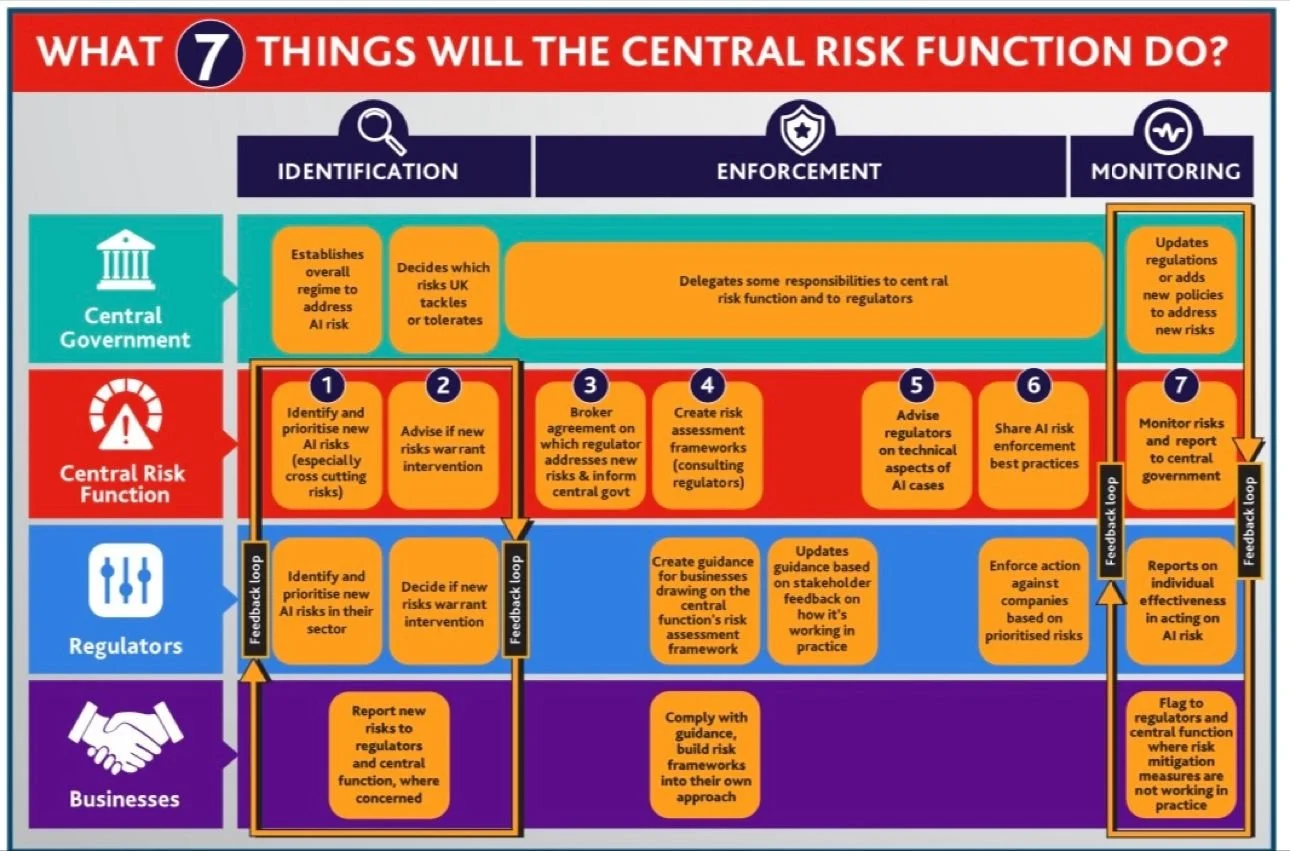

Organisations can adopt a central monitoring and evaluation (M&E) function to manage AI risk (see the accompanying diagram; source: UK White Paper on AI Regulation).

Trustworthy AI tools can be adopted to enhance assurance and risk management processes.

International forums through which the UK is playing a role in AI risk management include the OECD AI Governance Working Party (AIGO), the Global Partnership on AI (GPAI), the G7, the Council of Europe Committee on AI (CAI), UNESCO, and global standards development organisations.

For risk professionals, the white paper presents an interesting approach to defining and governing AI risk.

The full white paper can be found here.